How your ad is delivered to consumers can greatly affect ad effectiveness. A new report finds that ads managed using machine learning outperform ads that are managed by humans alone.

This raised a question for us: how do ads managed by machine learning compare with a third delivery method, ads that bypass the traditional media-interruption process completely? This is the type of ad delivery Dabbl offers.

Machine vs Human Ad Delivery

IPG Mediabrands’ Magna and IPG Media Lab, along with true[X], “utilized true[X]’s Up//Lift measurement technology to measure three campaigns over a month to find out which performed better: campaigns managed with or without machine learning.”

Ad industry publisher Campaign quotes Jamie Auslander, true[X]’s senior vice president of research and analytics:

“What machines are able to do is make ads dramatically more effective because they can identify receptive consumers, and when they do that, they can significantly increase brand impact.”

Non-media-interruption Ad Delivery

At Dabbl, we’re seeing even more dramatic ad effectiveness via a very different delivery method.

Giving consumers more control makes ads dramatically more effective, because consumers are far more receptive when they choose to engage on their own terms. That receptiveness, in turn, increases brand impact.

Imperfect Comparison

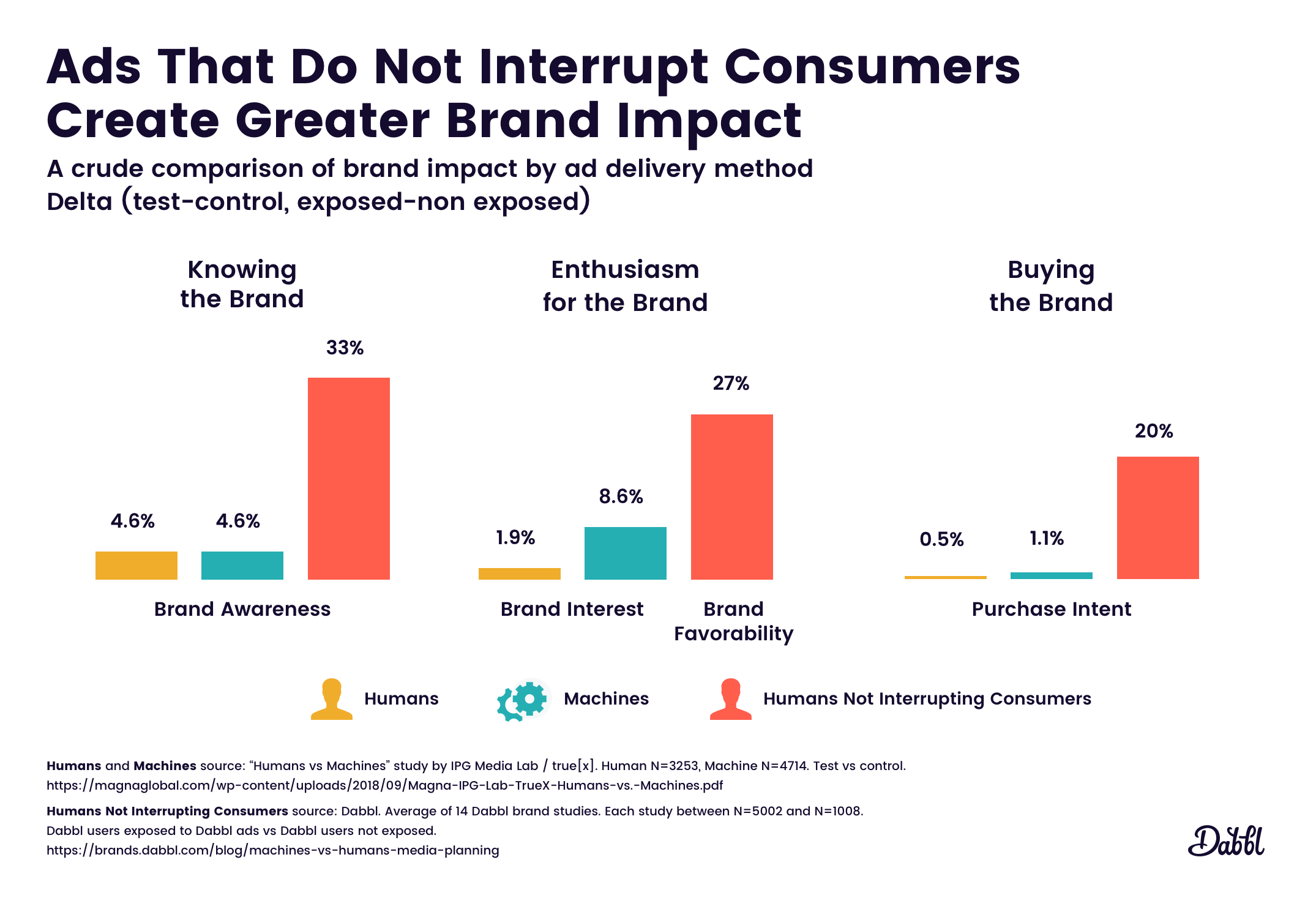

We were intrigued by the IPG Media Lab / true[X] findings, and curious how brand impact stacked up across three different approaches:

1) Media planning using machine learning for traditional media-interrupting ads, i.e., “Machines.”

2) Media planning performed solely by humans for traditional media-interrupting ads, i.e., “Humans.”

3) Humans respecting consumers’ choice with media-bypassing, permission-based ads, i.e., “Humans letting humans choose.”

Obviously, this isn’t an apples-to-apples comparison. The core difference is that these are two different types of ads. They share some basic similarities, but the table below shows where they differ.

| true[X] ad | Dabbl ad |
|---|---|
| User opts in during media interruption | User opts in at a time of their choosing |
| Media interrupted | Media NOT interrupted |
| Ad budget shared with publishers | Ad budget shared with users |
| 30 seconds | Avg. 38 seconds |
| At least 1 interaction with creative | Avg. 6.75 interactions with creative |
| 100% share of screen | 100% share of screen |

But we feel these differences are a core part of the calculation. As the table shows, the third delivery method (the Dabbl ad column) outperforms the two media-interruption methods (the true[X] column) on both the average number of interactions and time spent.

And consumers’ willingness to engage with an ad is central to how a delivery method performs.

How The Three Methods Stack Up

We acknowledge this is a crude comparison. Here’s what we did: we took the published Machine vs. Human brand impact results for their three campaigns and compared them, as best we could, with the average brand impact results from Dabbl’s last 14 brand studies.

These studies compare brand measurements for Dabbl users who were exposed to a given Dabbl ad with those for Dabbl users who were not. Each study had between 1,008 and 5,002 respondents.

Knowing the Brand

Both IPG Media Lab / true[X] and Dabbl measured brand awareness:

Machines: +4.6%

Humans: +4.6%

Humans Not Interrupting Consumers: +33%

Enthusiasm for the Brand

IPG Media Lab / true[X] measured brand interest and brand preference. Below is their brand interest measurement, the more impressive of the two. Dabbl measured brand favorability.

Machines (brand interest): +8.6%

Humans (brand interest): +1.9%

Humans Not Interrupting Consumers (brand favorability): +27%

Buying the Brand

Purchase intent:

Machines: +1.1%

Humans: +0.5%

Humans Not Interrupting Consumers: +20%

The Conclusion

What conclusion did IPG Media Lab / true[X] reach?

In their report they highlight that “the hidden variable is… AD RECEPTIVITY.”

We agree.

Looking at the results above, our conclusion is that nothing increases consumer receptiveness quite like giving consumers control over when and how they view your ad.

Whether ads are planned by humans or by machine learning, if they interrupt people’s media, consumers are less receptive to them than when they choose to view them.

Or stated another way, when you remove the media (interruptions) from “media planning”, you get greater consumer receptivity and greater brand impact.

Dabbl cofounder and CEO Susan O’Neal explains why this works in a video, and you can give Dabbl a try yourself.